In an increasingly digital landscape, where new technologies such as ChatGPT are becoming part of our daily lives, data security has become an unquestionable priority.

Companies and individuals are constantly in the crosshairs of cybercriminals, who are ready to exploit any vulnerability to gain unauthorized access to confidential information.

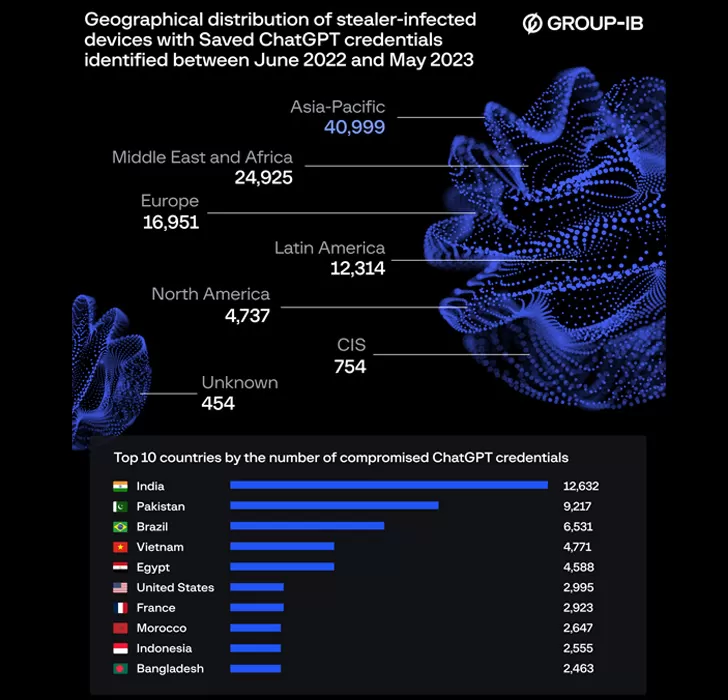

An alarming example of this reality is the recent leak of more than 100,000 account credentials for ChatGPT, a widely used artificial intelligence tool from OpenAI. The stolen credentials ended up being sold on illicit markets on the dark web.

This incident not only underscores the growing sophistication of cyber attacks, but also highlights the urgency of robust data security strategies to protect corporate and personal information from these threats.

ChatGPT Credentials Leak

The ChatGPT credentials leak, one of the largest data security incidents involving the tool since its launch on November 30, 2022, represents a worrisome milestone in the enterprise cybersecurity landscape.

More than 100,000 ChatGPT accounts have been compromised, with their credentials found for sale in various illicit marketplaces on the dark web, highlighting the breadth and depth of the problem.

The modus operandi relies on Information Stealers, or information thieves: malicious software that specializes in stealing personal and corporate identifying information.

Three Info Stealers, as they are also known, stood out in this operation: Raccoon, Vidar and RedLine. These have proven to be especially effective, accounting for a significant portion of the credentials stolen and sold on Internet black markets.

In addition to login credentials, these info stealers can capture saved passwords, cookies, credit card information, and other sensitive data from browsers and cryptocurrency wallet extensions.

A worrisome scenario for companies that are incorporating ChatGPT into their operations

The ChatGPT credentials leak resonates with particular intensity in Brazil. The country unfortunately stood out as the third most affected by this data compromise, with a significant number of compromised ChatGPT credentials found for sale on the dark web.

This puts both Brazilian companies and individuals in a potentially high-risk position.

The situation is even more worrisome when we consider the growing role that ChatGPT plays in business operations.

Many organizations, especially those in the technology sector, are integrating ChatGPT into their operational flows to optimize various processes, from customer service to data analysis.

However, this integration has its pitfalls. Given the default ChatGPT configuration, which retains all conversations, confidential business information and classified correspondence can be exposed if account credentials are compromised.

This vulnerability could inadvertently provide a goldmine of sensitive intelligence for cybercriminals if they gain access to ChatGPT accounts.

This highlights the urgent need for robust security practices in businesses, particularly those that rely on tools like ChatGPT in their daily operations.

Information security, data protection, and data privacy should not be an afterthought, but vital components of any company’s operational strategy.

The importance of data security and best practices for corporate use of ChatGPT

The rise of info stealers, which have proven effective at hijacking passwords, cookies, credit card data, and other sensitive information from browsers and cryptocurrency wallet extensions, presents a significant challenge for companies using AI tools like ChatGPT in the corporate environment.

The use of ChatGPT by businesses and organizations has grown in popularity due to its ability to streamline processes and improve operational efficiency.

However, the default ChatGPT configuration, which retains all conversations, can inadvertently hand a great deal of sensitive information to criminals if they manage to obtain the prompts and the responses to them.

This can include details of classified correspondence and proprietary code, creating a significant security risk for companies.

Mitigating risk is key to realizing the benefits of ChatGPT in the enterprise

To mitigate these risks, it is crucial that companies adopt robust password hygiene practices and implement security measures such as two-factor authentication (2FA) on their internal systems.

Two-factor authentication offers an additional layer of protection because it requires users to verify their identity using two different methods before accessing their accounts.

This can greatly reduce the risk of account takeover attacks, even if a user’s credentials are compromised.
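To make the second factor concrete, here is a minimal sketch of a time-based one-time password (TOTP) check, the mechanism behind most authenticator apps, following RFC 6238 with HMAC-SHA1. The function names `totp` and `verify_totp`, the 30-second step, and the one-step tolerance window are illustrative assumptions, not a description of how any particular product implements 2FA. A production system should rely on a maintained library and add rate limiting.

```python
import hashlib
import hmac
import struct
import time
from typing import Optional


def totp(secret: bytes, at_time: Optional[float] = None,
         step: int = 30, digits: int = 6) -> str:
    """Compute a time-based one-time password (RFC 6238, HMAC-SHA1)."""
    if at_time is None:
        at_time = time.time()
    # The moving factor is the number of whole time steps since the Unix epoch.
    counter = int(at_time) // step
    digest = hmac.new(secret, struct.pack(">Q", counter), hashlib.sha1).digest()
    # Dynamic truncation (RFC 4226): the low nibble of the last byte picks an offset.
    offset = digest[-1] & 0x0F
    code = struct.unpack(">I", digest[offset:offset + 4])[0] & 0x7FFFFFFF
    return str(code % 10 ** digits).zfill(digits)


def verify_totp(secret: bytes, submitted: str, at_time: Optional[float] = None,
                step: int = 30, window: int = 1) -> bool:
    """Accept a code from the current step or `window` steps either side (clock drift)."""
    if at_time is None:
        at_time = time.time()
    for drift in range(-window, window + 1):
        candidate_time = at_time + drift * step
        if candidate_time < 0:
            continue  # no valid time step before the epoch
        expected = totp(secret, candidate_time, step, len(submitted) or 6)
        # Constant-time comparison avoids leaking how many digits matched.
        if hmac.compare_digest(expected.encode(), submitted.encode()):
            return True
    return False
```

Because the code changes every 30 seconds and is derived from a secret that never leaves the user's device and the server, a password harvested by an info stealer is not enough on its own to log in.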

In addition, companies should consider implementing data security policies that limit the type of information that can be shared through ChatGPT.

This, along with regular employee education on data security best practices, can help minimize the risk of compromise to company information.
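A policy that limits what can be shared with ChatGPT can be partly enforced in software, for example by redacting obviously sensitive values before a prompt leaves the company network. The sketch below is a simplified illustration under stated assumptions: the `redact` function, the `PATTERNS` table, and the three regular expressions (e-mail addresses, Brazilian CPF numbers in dotted format, and card-like digit runs) are hypothetical examples, not a complete data loss prevention rule set.

```python
import re
from typing import Dict, Tuple

# Illustrative patterns only (assumptions); a real policy would need a broader,
# tested rule set tailored to the company's data.
PATTERNS = {
    "EMAIL": re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}"),
    "CPF": re.compile(r"\b\d{3}\.\d{3}\.\d{3}-\d{2}\b"),
    # 13 to 16 digits, optionally separated by single spaces or hyphens.
    "CARD": re.compile(r"\b\d(?:[ -]?\d){12,15}\b"),
}


def redact(prompt: str) -> Tuple[str, Dict[str, int]]:
    """Replace sensitive-looking values with placeholders and count what was removed."""
    counts: Dict[str, int] = {}
    for label, pattern in PATTERNS.items():
        prompt, replaced = pattern.subn(f"[{label}_REDACTED]", prompt)
        if replaced:
            counts[label] = replaced
    return prompt, counts


if __name__ == "__main__":
    text = "Contact ana@example.com, CPF 123.456.789-09, card 4111 1111 1111 1111 today."
    clean, found = redact(text)
    print(clean)
    print(found)
```

The returned counts could feed an audit log, so that security teams see how often employees attempt to share restricted data without storing the data itself. Pattern matching of this kind will miss free-form secrets such as contract details, which is why it complements employee education rather than replacing it.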

It is vital that companies recognize the need to incorporate robust security, data protection and privacy practices into their use of ChatGPT and other AI tools.

In this way, they can enjoy the benefits of these advanced technologies while remaining secure against cyber threats.

About Eval

Eval has been developing projects in the financial, health, education, and industry segments for over 18 years. Since 2004, we have offered solutions for Authentication, Electronic and Digital Signature, and Data Protection. Currently, we are present in the main Brazilian banks, health institutions, schools and universities, and different industries.

Recognized by the market, Eval’s solutions and services meet the highest regulatory standards of public and private organizations, such as SBIS, ITI, PCI DSS, and LGPD (Brazil’s General Data Protection Law). In practice, we promote information security and compliance, increase companies’ operational efficiency, and reduce costs.

Innovate now, lead always: get to know Eval’s solutions and services and take your company to the next level.